Software Engineers Don't Talk Anymore

Instead of asking each other questions, they consult large language models

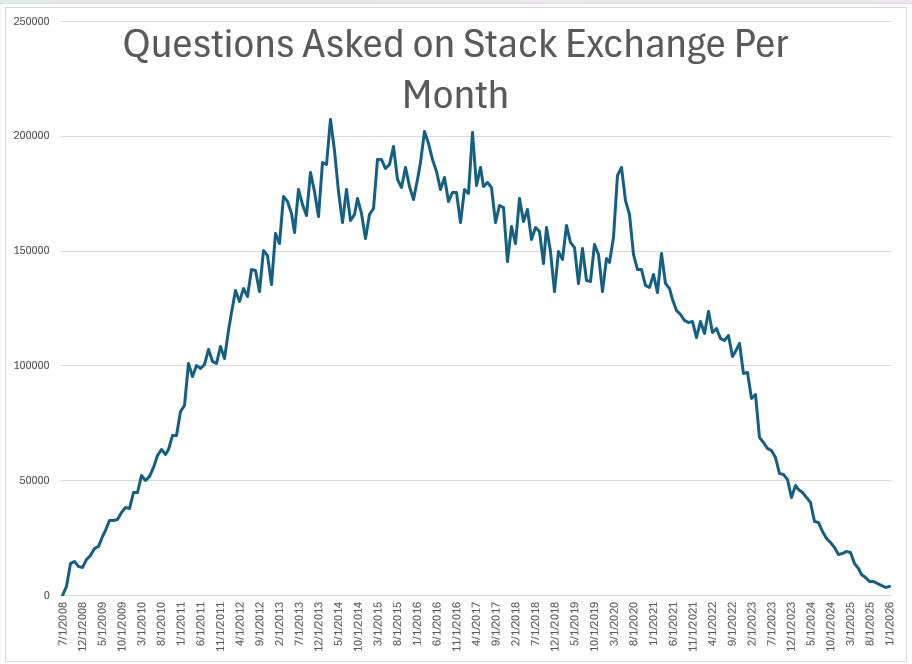

This shows the birth and near-death of a community. Not a small town with a folksy general store, but a community nonetheless. Starting in 2008, Stack Exchange became an important place for programmers, software engineers and others to exchange tips on how to code, debug code, etc.

There is a camaraderie among software jockeys that’s hard to understand from the outside. “Good code is like a love letter to the next developer who will maintain it,” Addy Osmani wrote in “Learning JavaScript Design Patterns.” (Not a book in which I would have expected a mention of love letters.)

Now, though, Stack Exchange, a virtual place where that camaraderie lived, is nearly gone, done in largely by artificial intelligence. You can see in the chart that the number of questions asked on Stack Exchange peaked around 2013, slowly declined, spiked again when Covid hit, resumed its slow decline—and then went into a freefall shortly after the introduction in November 2022 of ChatGPT, the first highly capable large language model.

In other words, A.I. wasn’t the only factor in the near-death of Stack Exchange, but it was the big one. The number of questions asked last month looks like zero on the chart. It was actually 3,781.

I saw a version of this chart reproduced on Noah Smith’s excellent Substack, Noahpinion, in a post today called “Fall of the Nerds.” Smith wrote:

> This represents the end of what Brad DeLong calls a “community of engineering practice.” Software engineers are no longer exchanging ideas with each other online; they are getting their ideas from A.I., which embeds the distilled understanding of every software engineer who ever lived.

There’s a story behind this story, which is how I created my chart. I clicked on the link that Noah provided, which took me to a post on X by Aayush Giri. I thought I should get the original data for safety’s sake, so I went to the Stack Exchange website, but couldn’t find any pre-packaged charts.

So I turned to artificial intelligence—doing exactly what I was writing about software engineers doing. Microsoft Copilot instantly generated a bunch of code* for me to plug into the Stack Exchange Data Explorer. Out popped the data.

The whole thing required zero, and I mean zero, programming skill on my part. Relevant to this article, it also required zero interaction with another human being. Which is efficient, but also a little sad.

*Here’s the code, for what it’s worth:

    SELECT
        DATEFROMPARTS(YEAR(CreationDate), MONTH(CreationDate), 1) AS Month,
        COUNT(*) AS Questions
    FROM Posts
    WHERE PostTypeId = 1 -- 1 = Question
    GROUP BY
        DATEFROMPARTS(YEAR(CreationDate), MONTH(CreationDate), 1)
    ORDER BY Month;
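
For readers curious about what that query does: it keeps only the posts that are questions, rounds each creation date down to the first day of its month, and counts how many fall in each month. Here is a rough Python sketch of the same logic. The sample rows and the function name `questions_per_month` are made up for illustration. Nothing here comes from the Stack Exchange Data Explorer, and it is not the code behind the chart.

```python
from collections import Counter
from datetime import date, datetime

# Hypothetical sample rows standing in for the Posts table:
# (PostTypeId, CreationDate). 1 = question, 2 = answer.
posts = [
    (1, datetime(2008, 8, 1, 9, 30)),
    (1, datetime(2008, 8, 15, 14, 0)),
    (2, datetime(2008, 8, 15, 14, 5)),  # an answer, so it is filtered out
    (1, datetime(2008, 9, 2, 11, 45)),
]


def questions_per_month(rows):
    """Count questions per calendar month, keyed by the first day of the month."""
    counts = Counter(
        date(created.year, created.month, 1)  # plays the role of DATEFROMPARTS(..., 1)
        for post_type, created in rows
        if post_type == 1                     # WHERE PostTypeId = 1
    )
    return sorted(counts.items())             # ORDER BY Month


for month, n in questions_per_month(posts):
    print(month.isoformat(), n)
```

Keying each month by its first day, as the query does, gives every month a real date, so the result sorts and plots in chronological order.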

Fascinating tension here between efficiency gains and the loss of communal knowledge-building. The irony is that LLMs were trained on exactly those Stack Exchange threads, yet now they're replacing the very ecosystem that generated that training data in the first place.

This phenomenon is nothing unusual. Tools are created that make complicated things easier to use. High-level languages supplanted machine language, voice recognition supplanted typing, and now LLMs are being used to generate code, which makes them just another higher-level language. But, as Larry Tesler famously said, "Complexity is conserved." The speed that is gained can lead to errors when the desired result is not clearly specified, or when the tool is incorrectly applied to a problem. As with all tools, learning to use this one well requires study, experience, and critical thinking.